Thinking Fast and Slow in the Neuro-symbolic AI World

On March 27th, the world lost a towering intellect in Daniel Kahneman, a Nobel laureate renowned for his groundbreaking work in psychology and behavioral economics. In December 2019, Yoshua Bengio, a pioneering figure in deep learning, spotlighted Kahneman’s dual-process theory of cognition during his keynote at NeurIPS 2019. This theory, popularized in Kahneman’s book “Thinking, Fast and Slow,” distinguishes between two systems of thinking.

“System 1” thinking is rapid and intuitive, drawing on immediate memory recall and statistical pattern recognition to make quick judgments or decisions. In contrast, “System 2” thinking is slower and more methodical, and requires deliberate effort and logical reasoning. It is associated with complex problem-solving and decision-making processes that involve extended chains of reasoning and adhere to the principles of symbolic logic. For example, answering a simple arithmetic question like “2+2” typically triggers the fast, automatic response of System 1. Proving the Pythagorean theorem, on the other hand, engages System 2, because it demands a more thoughtful and analytical approach.

Interestingly, Large Language Models (LLMs) are proving adept at System 1 reasoning, efficiently handling straightforward queries. Researchers are pushing the boundaries, challenging LLMs with tasks that require complex thought, often with notable success. However, these models are not infallible: they make mistakes, particularly on complex reasoning. This is where integrating System 2 thinking, in the form of a symbolic reasoner, could help correct the mistakes of System 1 reasoners like LLMs.

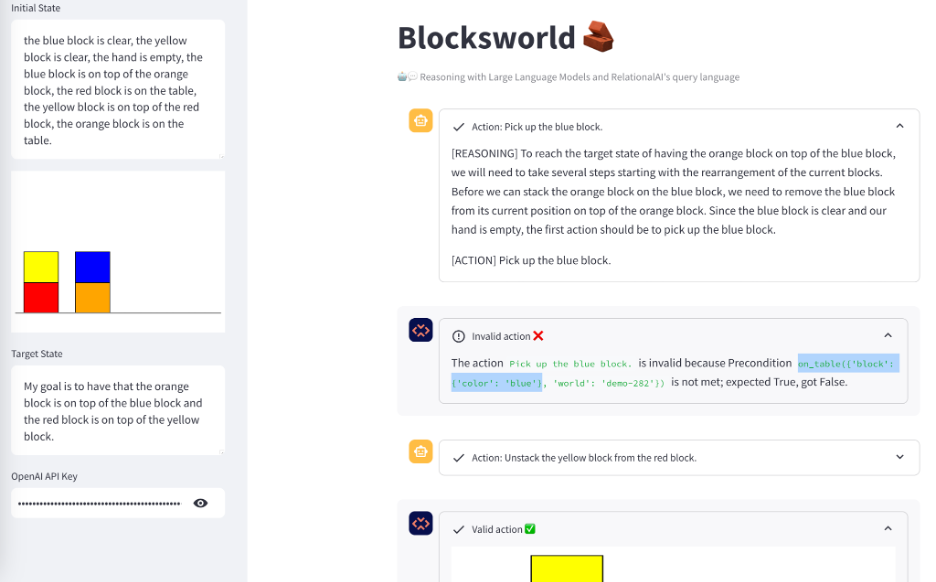

Take the Blocksworld problem as an example, in which one must rearrange a stack of blocks into a new configuration. This planning task requires System 2 thinking. We first had an LLM suggest an action at each step, then paired it with a formal system (such as Lean or Coq) that checks each action’s validity. In our experiments, we used RelationalAI’s query language as the formal symbolic system. As a result, incorrect actions were caught and corrected, illustrating how symbolic logic systems can guide LLMs when they must follow simple logical rules.

In the Blocksworld domain shown in the figure above, a block resting on top of another block must be unstacked, and only blocks that are on the table can be picked up. The LLM understandably gets this detail wrong, but RelationalAI’s system provides real-time feedback so that the LLM can correct its action and ultimately generate a valid plan.
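To make the propose-and-check loop concrete, here is a minimal Python sketch. It assumes the standard four-operator Blocksworld rules (pick-up, put-down, unstack, stack) and uses plain Python as the symbolic checker, not RelationalAI’s query language. `make_mock_llm` is a hypothetical, scripted stand-in for an LLM; all function names here are illustrative assumptions, not part of any RelationalAI API.

```python
from dataclasses import dataclass
from typing import Dict, Optional, Tuple

TABLE = "table"
Action = Tuple[str, ...]  # e.g. ("pick-up", "A") or ("unstack", "A", "B")


@dataclass
class State:
    on: Dict[str, str]             # block -> what it rests on (TABLE or a block)
    holding: Optional[str] = None  # block currently in the hand, if any

    def is_clear(self, block: str) -> bool:
        # Clear = placed somewhere (not held) with nothing resting on it.
        return block in self.on and block not in self.on.values()


def check(state: State, action: Action) -> Optional[str]:
    """Symbolic checker: None if `action` is legal in `state`, else feedback."""
    name, *args = action
    if name in ("pick-up", "unstack") and state.holding is not None:
        return "hand is not empty"
    if name == "pick-up":
        (x,) = args
        if state.on.get(x) != TABLE:
            return f"{x} is not on the table; use unstack"
        return None if state.is_clear(x) else f"{x} is not clear"
    if name == "unstack":
        x, y = args
        if state.on.get(x) != y:
            return f"{x} is not on {y}"
        return None if state.is_clear(x) else f"{x} is not clear"
    if name == "put-down":
        (x,) = args
        return None if state.holding == x else f"not holding {x}"
    if name == "stack":
        x, y = args
        if state.holding != x:
            return f"not holding {x}"
        return None if state.is_clear(y) else f"{y} is not clear"
    return f"unknown action {name}"


def apply(state: State, action: Action) -> State:
    """Successor state; assumes check(state, action) returned None."""
    name, *args = action
    on = dict(state.on)  # copy so the caller's state is not mutated
    if name in ("pick-up", "unstack"):
        del on[args[0]]
        return State(on, holding=args[0])
    on[args[0]] = TABLE if name == "put-down" else args[1]  # put-down or stack
    return State(on, holding=None)


def goal_reached(state: State, goal: Dict[str, str]) -> bool:
    return state.holding is None and all(state.on.get(b) == s for b, s in goal.items())


def plan_with_feedback(state, goal, propose, max_steps=20):
    """Ask `propose` for one action at a time; reject illegal ones with feedback."""
    plan, feedback = [], None
    for _ in range(max_steps):
        if goal_reached(state, goal):
            return plan
        action = propose(state, feedback)
        feedback = check(state, action)
        if feedback is None:  # legal: commit the action
            state = apply(state, action)
            plan.append(action)
    return plan if goal_reached(state, goal) else None


def make_mock_llm(script):
    """Hypothetical stand-in for an LLM: replays `script`, but turns a rejected
    pick-up into an unstack when the checker's feedback suggests it."""
    queue, last = list(script), None

    def propose(state, feedback):
        nonlocal last
        if feedback and "use unstack" in feedback and last[0] == "pick-up":
            last = ("unstack", last[1], state.on[last[1]])
        else:
            last = queue.pop(0) if queue else ("give-up",)
        return last

    return propose


# A rests on B; the goal is to invert the stack so that B rests on A.
start = State(on={"A": "B", "B": TABLE})
script = [("pick-up", "A"), ("put-down", "A"), ("pick-up", "B"), ("stack", "B", "A")]
print(plan_with_feedback(start, {"B": "A"}, make_mock_llm(script)))
# [('unstack', 'A', 'B'), ('put-down', 'A'), ('pick-up', 'B'), ('stack', 'B', 'A')]
```

The mock’s first proposal, picking up A while it sits on B, is rejected, and the checker’s message steers it to `unstack` instead. Returning a readable message rather than a bare boolean is a deliberate choice in this sketch: with a real LLM, that text could be fed back into the next prompt.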

However, real-world problems often involve logical constraints and complex data relationships. This is where Knowledge Graphs (KGs), such as RelationalAI’s knowledge graph coprocessor for data clouds, become invaluable: they provide the data management and relational capabilities that more sophisticated tasks need, more simply and at scale. The synergy between LLMs and KGs has also been applied to more complex problems, such as mathematical proofs. This year’s NeurIPS covered the topic extensively, with both a tutorial and a workshop, and you can read our analysis of the topic as well.

In an upcoming post, we’ll explore how an LLM collaborates with the RelationalAI SDK for Python to tackle complex optimization challenges.

Daniel Kahneman, the brilliant psychologist and Nobel Prize-winning economist, profoundly shaped our understanding of decision-making. His research continues to resonate in work like the Blocksworld experiment above, leaving an enduring legacy that we honor today.

Giancarlo Fissore is a Data Scientist at RelationalAI focusing on Natural Language Processing and Large Language Models. He earned his PhD in machine learning on topics related to generative models, bringing a unique blend of self-taught programming skills and formal education to his current role. His work aims to enhance the capabilities of generative AI systems in understanding and processing human language with the help of Knowledge Graphs.

Nikolaos Vasiloglou is the VP of Research-ML at RelationalAI. He has spent his career building ML software and leading data science projects in retail, online advertising, and security. He is a member of the ICLR/ICML/NeurIPS/UAI/MLconf/KGC/IEEE S&P community, having served as an author, reviewer, and organizer of workshops and the main conference. Nikolaos leads the research and strategic initiatives at the intersection of Large Language Models and Knowledge Graphs for RelationalAI.

About RelationalAI

RelationalAI is the industry’s first AI coprocessor for data clouds and language models. Its groundbreaking relational knowledge graph system expands data clouds with integrated support for graph analytics, business rules, optimization, and other composite AI workloads, powering better business decisions. RelationalAI is cloud-native and built with the proven and trusted relational paradigm. These characteristics enable RelationalAI to seamlessly extend data clouds and empower you to implement intelligent applications with semantic layers on a data-centric foundation.